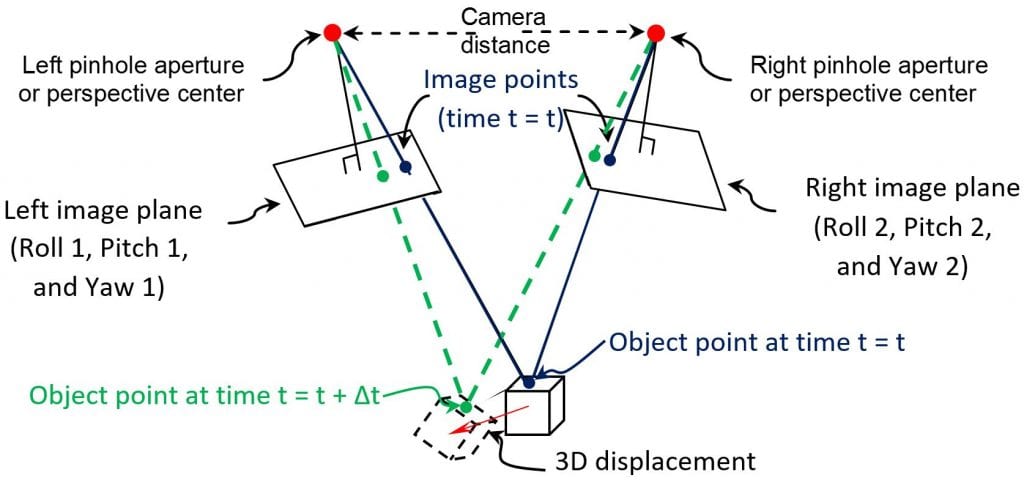

Photogrammetry and 3D-DIC have proven to be useful approaches for full-field SHM, NDE, and structural dynamic measurements on numerous structures. Modern stereophotogrammetry uses a pair of digital cameras that are calibrated to determine their relative position (i.e., the cameras' mutual distance and spatial orientation) and to account for the internal distortion parameters of each lens.

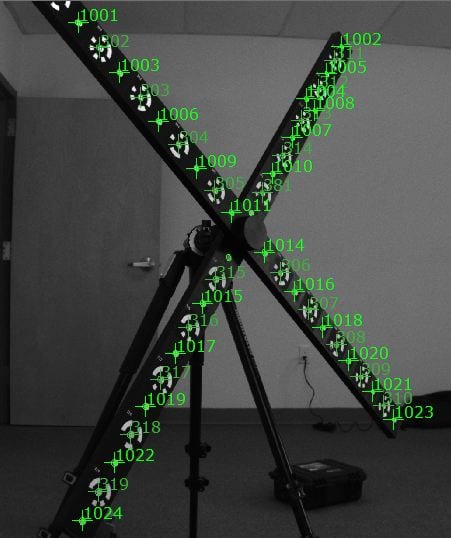

For fields of view up to ~2 meters, calibration is performed by taking several pictures of NIST-traceable calibration objects (e.g., panels or crosses) containing optical coded targets whose positions are known in advance. A process known as bundle adjustment is then used to establish the precise relationship between the two cameras.

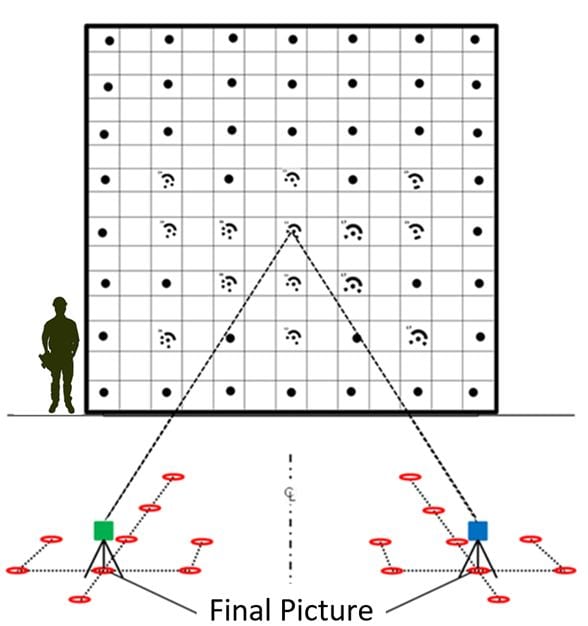

As the dimensions of the targeted object increase, a more complicated procedure (i.e., large-area calibration) needs to be performed. This operation is arduous because it involves coded and un-coded targets that have to be placed over an area with dimensions comparable to those of the object to be tested. Moreover, the calibration itself is time-consuming for two reasons: the distance between several pairs of targets needs to be measured so that they can serve as scale bars, and each camera must be independently calibrated before a last picture is acquired with the two cameras placed in their final position to calculate the relative geometry of the stereo-vision system. Once the final image is taken, the location of one camera with respect to the other cannot change. Any change would invalidate the calculated extrinsic parameters and result in a loss of calibration.

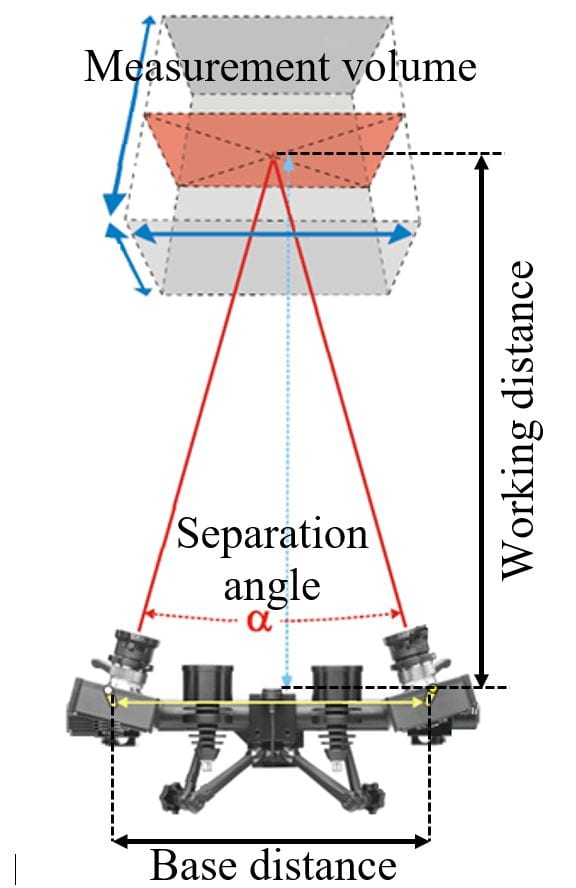

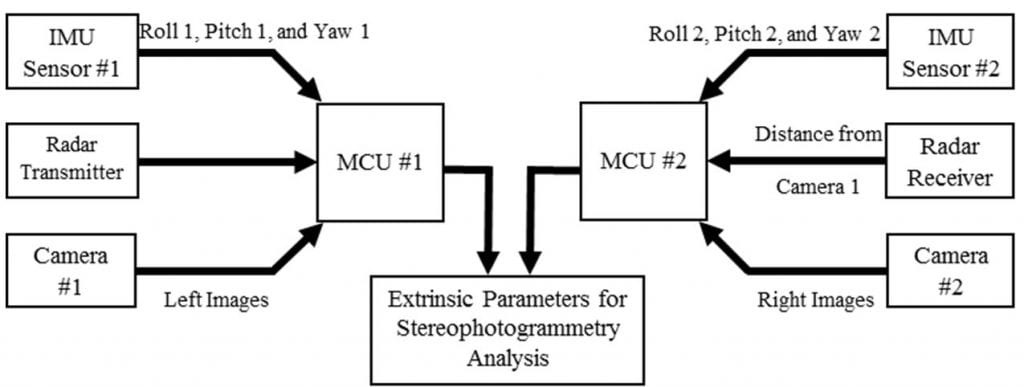

To eliminate the biggest hurdle of 3D-DIC systems, namely the time-consuming calibration needed to determine the relative position of two mobile cameras, a wireless multi-sensor system has been developed. This sensing system acquires and records seven degrees of freedom (DOFs) between a pair of mobile cameras. These data can be used to determine the relative position of the cameras in space, which is necessary to perform 3D-DIC or three-dimensional point-tracking (3DPT).

By using a MEMS-based Inertial Measurement Unit (IMU) and a 77 GHz radar embedded on a microcontroller unit (MC) installed on each of the cameras, the seven DOFs needed to identify the cameras' relative position can be calculated. The seven DOFs are: (1) the distance between the cameras, (2, 3, 4) the roll, pitch, and yaw of camera #1, and (5, 6, 7) the roll, pitch, and yaw of camera #2.
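As a rough illustration of how such readings could be combined, the sketch below builds a rotation matrix for each camera from its roll, pitch, and yaw, and derives the rotation of camera #2 relative to camera #1. It is not the published algorithm. It assumes a Z-Y-X (yaw-pitch-roll) Euler convention and a reference frame shared by both IMUs. The summary above does not say how the direction of the baseline between the cameras is obtained, so `baseline_dir_ref` is a hypothetical extra input, as are all function names.

```python
import math


def rotation_from_rpy(roll, pitch, yaw):
    """Camera-to-reference rotation, assuming R = Rz(yaw) * Ry(pitch) * Rx(roll), angles in radians."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def transpose(m):
    return [list(row) for row in zip(*m)]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def relative_pose(distance, rpy1, rpy2, baseline_dir_ref):
    """Return (R_rel, t): pose of camera #2 expressed in the frame of camera #1.

    distance         -- radar range between the cameras (DOF 1), in meters
    rpy1, rpy2       -- (roll, pitch, yaw) of each camera (DOFs 2-7), in radians
    baseline_dir_ref -- ASSUMED input: direction from camera #1 to camera #2
                        in the shared reference frame (any nonzero length)
    """
    r1 = rotation_from_rpy(*rpy1)
    r2 = rotation_from_rpy(*rpy2)
    r1_t = transpose(r1)
    # Orientation of camera #2 as seen from camera #1.
    r_rel = matmul(r1_t, r2)
    # Scale the unit baseline direction by the radar range, then rotate it
    # from the reference frame into the frame of camera #1.
    norm = math.sqrt(sum(c * c for c in baseline_dir_ref))
    unit = [c / norm for c in baseline_dir_ref]
    t = [distance * sum(r1_t[i][k] * unit[k] for k in range(3)) for i in range(3)]
    return r_rel, t


if __name__ == "__main__":
    # Hypothetical readings: cameras 2 m apart, camera #2 yawed by 90 degrees.
    r_rel, t = relative_pose(2.0, (0.0, 0.0, 0.0), (0.0, 0.0, math.pi / 2), (1.0, 0.0, 0.0))
    print(r_rel, t)
```

The pair `(R_rel, t)` plays the role of the extrinsic parameters that a traditional calibration would otherwise estimate from calibration targets.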

The developed approach will make it possible to triangulate the recorded images, calibrate each picture independently, and perform quick 3D-DIC measurements over extensive areas without traditional calibration procedures that rely on large calibration panels or surfaces. It also means that the cameras can move around an object without losing calibration, which enables measurements from multiple fields of view and extends the use of 3D-DIC to UAV-based inspection.
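To make the triangulation step concrete, here is a minimal sketch of midpoint triangulation, one simple way to recover a 3D point once the relative position of the two cameras is known. It is offered as an illustration only and is not claimed to be the method used in this research. The camera centers and viewing-ray directions are hypothetical inputs expressed in a common frame.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def triangulate_midpoint(c1, d1, c2, d2, eps=1e-12):
    """Midpoint of the shortest segment joining two viewing rays.

    c1, c2 -- camera centers (3-element sequences) in a common frame
    d1, d2 -- ray directions through the matched image point (need not be unit length)
    """
    w0 = [p - q for p, q in zip(c1, c2)]
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < eps:
        raise ValueError("rays are (nearly) parallel; the point cannot be triangulated")
    # Ray parameters of the closest points on each ray.
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = [ci + s * di for ci, di in zip(c1, d1)]
    p2 = [ci + t * di for ci, di in zip(c2, d2)]
    return [(u + v) / 2.0 for u, v in zip(p1, p2)]


if __name__ == "__main__":
    # Hypothetical setup: cameras 1 m apart, both looking at a point 2 m away.
    point = triangulate_midpoint((0.0, 0.0, 0.0), (0.5, 0.0, 2.0),
                                 (1.0, 0.0, 0.0), (-0.5, 0.0, 2.0))
    print(point)
```

With noisy measurements the two rays do not intersect exactly, which is why the midpoint of their closest approach is returned rather than an exact intersection.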

A number of laboratory experiments have shown that the radar measures distances in the range from 1 to 8 meters with an average accuracy of 2.46·10⁻³ m, while the IMU detects changes in orientation with an accuracy of ~1° in a back-to-back comparison with an inclinometer embedded in a smartphone. An improved set of experiments still needs to be planned to better characterize the performance of the IMU and radar systems and to eliminate the systematic errors that may have affected the current tests.

The publication describing details of this research can be downloaded using the following link: