Overview of Accessible Interfaces for Robot Teleoperation

Description of interfaces paper SE2 and SE3

Links to Demo

Overview of Accessible Teleoperation of Mobile Manipulators in Home Environments

Description of Stretch paper

Overview of Handovers

Description of handover paper

Overview of Assistive Feeding for Users with Mobility Limitations

Description of ADA papers

Overview of Attitudes towards Social Robots with Teenagers

Description of EMAR collaboration

Overview of One-Shot Gesture Recognition

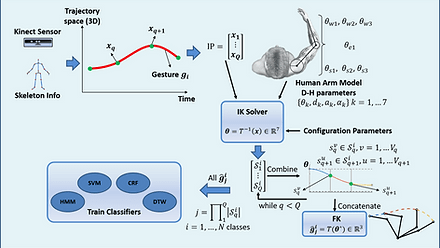

Humans can intuitively grasp the meaning of a new physical expression and generalize from a single observation, whereas machines typically require many examples to learn to recognize it. This gap is one of the main roadblocks to natural human-machine interaction, particularly for gestures, which are an intrinsic part of human communication. To achieve natural interaction with machines, we need a framework that captures the human ability to understand a gesture from a single observation.

This framework incorporates the human processes associated with gesture perception and production. From the single gesture example, we extract key points in the hands' trajectories; these key points have been found to correlate with spikes in visual and motor cortex activation. The key points are also used to find inverse kinematics solutions for a model of the human arm. In this way, the biomechanical and kinematic aspects of human gesture production are used to artificially enlarge the set of gesture examples.
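The key-point and inverse-kinematics steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the framework's actual implementation. Key points are approximated as local minima of hand speed, which is a common segmentation heuristic and not necessarily the criterion used in this work. The human arm is reduced to a hypothetical planar two-link model with made-up link lengths `L1` and `L2`. New examples are produced by adding Gaussian noise to the joint angles at each key point and mapping them back through forward kinematics. All function names (`extract_key_points`, `augment`, `demo_l_gesture`, and so on) are illustrative.

```python
import math
import random

# Hypothetical planar two-link arm: upper-arm length L1 and forearm length L2 (m).
# A simplified stand-in for a full human arm model.
L1, L2 = 0.30, 0.25


def hand_speeds(traj, dt=1 / 30):
    """Hand speed per sample: central differences, one-sided at the endpoints."""
    n = len(traj)
    speeds = []
    for i in range(n):
        a, b = max(i - 1, 0), min(i + 1, n - 1)
        dist = math.hypot(traj[b][0] - traj[a][0], traj[b][1] - traj[a][1])
        speeds.append(dist / ((b - a) * dt))
    return speeds


def extract_key_points(traj, dt=1 / 30):
    """Indices of local speed minima plus both endpoints (assumed key-point criterion)."""
    s = hand_speeds(traj, dt)
    inner = [i for i in range(1, len(s) - 1) if s[i] < s[i - 1] and s[i] <= s[i + 1]]
    return [0] + inner + [len(traj) - 1]


def inverse_kinematics(x, y):
    """One IK branch (elbow angle q2 in [0, pi]) for a hand position relative to the shoulder."""
    c2 = (x * x + y * y - L1**2 - L2**2) / (2 * L1 * L2)
    q2 = math.acos(max(-1.0, min(1.0, c2)))  # clamp points just out of reach
    q1 = math.atan2(y, x) - math.atan2(L2 * math.sin(q2), L1 + L2 * math.cos(q2))
    return q1, q2


def forward_kinematics(q1, q2):
    """Hand position for shoulder angle q1 and elbow angle q2."""
    return (L1 * math.cos(q1) + L2 * math.cos(q1 + q2),
            L1 * math.sin(q1) + L2 * math.sin(q1 + q2))


def interpolate(t_keys, pts, t):
    """Piecewise-linear interpolation between 2-D key points at parameter t in [0, 1]."""
    for k in range(len(t_keys) - 1):
        if t <= t_keys[k + 1]:
            w = (t - t_keys[k]) / (t_keys[k + 1] - t_keys[k])
            return tuple(pts[k][d] + w * (pts[k + 1][d] - pts[k][d]) for d in range(2))
    return pts[-1]


def augment(traj, n_samples=20, joint_noise=0.05, n_points=50, seed=0):
    """Synthesize gestures by jittering arm joint angles at each key point."""
    rng = random.Random(seed)
    idx = extract_key_points(traj)
    t_keys = [i / (len(traj) - 1) for i in idx]  # key-point timing, normalized to [0, 1]
    joints = [inverse_kinematics(*traj[i]) for i in idx]
    samples = []
    for _ in range(n_samples):
        pts = []
        for q1, q2 in joints:
            q1 += rng.gauss(0.0, joint_noise)
            q2 = min(math.pi, max(0.0, q2 + rng.gauss(0.0, joint_noise)))  # crude elbow limit
            pts.append(forward_kinematics(q1, q2))
        samples.append([interpolate(t_keys, pts, j / (n_points - 1)) for j in range(n_points)])
    return samples


def demo_l_gesture(n=30):
    """Hypothetical L-shaped gesture that eases to rest at its corner."""
    def ease(u):
        return (1 - math.cos(math.pi * u)) / 2
    a, b, c = (0.30, 0.10), (0.30, 0.30), (0.10, 0.30)
    traj = []
    for start, end, first in ((a, b, 0), (b, c, 1)):  # skip the duplicated corner sample
        for j in range(first, n):
            s = ease(j / (n - 1))
            traj.append((start[0] + s * (end[0] - start[0]), start[1] + s * (end[1] - start[1])))
    return traj


synthetic = augment(demo_l_gesture())  # 20 synthetic variants of one demonstration
```

Jittering in joint space rather than directly in Cartesian space is one way to keep every synthetic key point reachable by the assumed arm. A realistic human arm model would have more degrees of freedom and proper joint limits.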

These artificial examples can then be used to train standard state-of-the-art classification algorithms, which in turn recognize future instances of the same gesture class.
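The sketch below gives a rough illustration of this last step. It trains a k-nearest-neighbour classifier on synthetic copies of a single demonstration per class. k-NN is only a stand-in for whichever classifier is actually used. The synthetic examples here are simply noisy copies of each demonstration, not the kinematically generated examples described above. All names (`KNNGestureClassifier`, `resample`, `jittered_copies`, and the swipe gestures) are hypothetical.

```python
import math
import random
from collections import Counter


def resample(traj, n=32):
    """Linearly resample a 2-D trajectory to n points, translated to start at the origin."""
    m = len(traj)
    x0, y0 = traj[0]
    out = []
    for j in range(n):
        t = j * (m - 1) / (n - 1)
        i = min(int(t), m - 2)
        w = t - i
        out.append(traj[i][0] + w * (traj[i + 1][0] - traj[i][0]) - x0)
        out.append(traj[i][1] + w * (traj[i + 1][1] - traj[i][1]) - y0)
    return out  # flat feature vector [x0, y0, x1, y1, ...]


class KNNGestureClassifier:
    """k-nearest-neighbour classifier over resampled trajectories (a stand-in classifier)."""

    def __init__(self, k=3, n_points=32):
        self.k = k
        self.n_points = n_points
        self.features = []
        self.labels = []

    def fit(self, examples):
        """examples: dict mapping a class label to a list of trajectories."""
        for label, trajs in examples.items():
            for traj in trajs:
                self.features.append(resample(traj, self.n_points))
                self.labels.append(label)
        return self

    def predict(self, traj):
        """Majority vote among the k training examples closest in Euclidean distance."""
        query = resample(traj, self.n_points)
        nearest = sorted(
            (math.dist(query, f), label) for f, label in zip(self.features, self.labels)
        )[: self.k]
        return Counter(label for _, label in nearest).most_common(1)[0][0]


def jittered_copies(demo, count, noise=0.01, seed=0):
    """Hypothetical augmentation: add Gaussian noise to every sample of one demonstration."""
    rng = random.Random(seed)
    return [[(x + rng.gauss(0.0, noise), y + rng.gauss(0.0, noise)) for x, y in demo]
            for _ in range(count)]


# Usage: one demonstration per class, enlarged to 20 synthetic examples each.
swipe_right = [(i / 19, 0.0) for i in range(20)]
swipe_up = [(0.0, i / 19) for i in range(20)]
clf = KNNGestureClassifier(k=3).fit({
    "swipe_right": jittered_copies(swipe_right, 20, seed=1),
    "swipe_up": jittered_copies(swipe_up, 20, seed=2),
})
print(clf.predict([(0.9 * i / 19, 0.02) for i in range(20)]))  # prints: swipe_right
```

Resampling to a fixed length and translating to the start point gives every trajectory the same feature dimension and makes the comparison independent of where the gesture begins. Any classifier that accepts fixed-length vectors could replace k-NN here.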

Overview of STAR

With the System for Telementoring with Augmented Reality (STAR), we aim to increase the mentor's and trainee's sense of co-presence through an augmented visual channel and, in turn, to produce measurable improvements in the trainee's surgical performance. The goal of the project is to develop a system that can effectively provide remote assistance to novice surgeons or trained medics in less-than-ideal settings, such as rural areas or austere environments like the battlefield. The system connects the novice surgeon or medic to a remote expert, who may be at a Level I trauma center in a major US city.

The proposed system could also improve quantitative and qualitative outcomes in military healthcare in three further ways. First, telementoring can prevent or slow the loss of surgical skills by supporting retraining and maintaining competence. Second, it can provide instructional material in simulated form, in a portable and dynamic format, to support doctors serving in Iraq and Afghanistan who deliver trauma care on the battlefield. Finally, it will allow recent combat medic graduates to reinforce surgical techniques that were not covered in their training curricula.

Overview of Taurus Teleoperation Project

In current teleoperated robot-assisted surgery, surgeons manipulate joystick-like controllers at a master console, and robotic arms on the patient's side mimic those motions. This approach is becoming more popular than traditional minimally invasive surgery because it offers greater dexterity, precision, and accurate motion planning. However, a major drawback of such systems is the user experience: surgeons must retrain extensively to learn to operate cumbersome interfaces.
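A common way for a patient-side arm to mimic the operator is an incremental mapping with motion scaling and a clutch. The sketch below illustrates that generic idea and is not the Taurus system's controller. The class name `MotionMapper`, the 0.2 scale factor, and the clutch behaviour are assumptions chosen for clarity.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class MotionMapper:
    """Incremental master-to-tool mapping with motion scaling and a clutch (illustrative)."""

    scale: float = 0.2                  # hypothetical 5:1 scaling of hand motion
    tool: Vec3 = (0.0, 0.0, 0.0)        # commanded tool-tip position (m)
    last_master: Optional[Vec3] = None  # master position at the previous engaged cycle

    def update(self, master: Vec3, clutch_engaged: bool) -> Vec3:
        """Advance one control cycle and return the new tool command."""
        if not clutch_engaged:
            # Clutch released: the operator can reposition the controller
            # while the robot holds its current pose.
            self.last_master = None
            return self.tool
        if self.last_master is not None:
            delta = [m - p for m, p in zip(master, self.last_master)]
            self.tool = tuple(t + self.scale * d for t, d in zip(self.tool, delta))
        self.last_master = master
        return self.tool


mapper = MotionMapper()
mapper.update((0.00, 0.0, 0.0), True)
mapper.update((0.05, 0.0, 0.0), True)         # 5 cm hand stroke -> about 1 cm of tool motion
mapper.update((0.00, 0.0, 0.0), False)        # clutch out, hand returns to start
mapper.update((0.00, 0.0, 0.0), True)         # re-engage: no jump in the tool command
print(mapper.update((0.05, 0.0, 0.0), True))  # tool x is now about 0.02 m
```

Mapping increments instead of absolute positions lets the clutch reset the hand position without moving the robot. Scaling below 1 trades workspace for precision.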

To address this problem, we have developed a system that uses touchless interfaces for telesurgery. Applied to robotic surgery, this type of solution has the potential to let surgeons operate as if they were physically engaged with the surgery in situ, as is standard in traditional surgery. Because it relies on touchless interfaces, the system can incorporate more natural gestures, similar to the instinctive movements surgeons perform when operating, which enhances both the user experience and overall system performance. The system also uses sensory substitution to deliver force feedback to the user during teleoperation.
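Sensory substitution conveys the missing force channel through another modality. The function below is a hedged sketch of that idea: it maps a measured tool force vector to a vibration amplitude and an audio tone frequency. The thresholds (`f_min`, `f_max`), the tone range, and the linear mapping are hypothetical choices. The text does not say which substitute modality the system actually uses.

```python
import math


def force_to_cues(force_xyz, f_min=0.5, f_max=5.0, tone_lo=200.0, tone_hi=1000.0):
    """Substitute a tool-tissue force vector (N) with vibration and audio cues.

    Returns (vibration amplitude in [0, 1], tone frequency in Hz). Forces below
    f_min are treated as sensor noise and produce no cue (0.0, 0.0); forces at or
    above f_max saturate at full amplitude and the highest tone.
    """
    magnitude = math.sqrt(sum(f * f for f in force_xyz))
    if magnitude < f_min:
        return 0.0, 0.0
    level = min(1.0, (magnitude - f_min) / (f_max - f_min))  # linear ramp
    return level, tone_lo + level * (tone_hi - tone_lo)


print(force_to_cues((0.1, 0.0, 0.0)))   # (0.0, 0.0): below the noise threshold
print(force_to_cues((0.0, 0.0, 2.75)))  # (0.5, 600.0): halfway up the ramp
print(force_to_cues((3.0, 4.0, 0.0)))   # (1.0, 1000.0): |F| = 5 N saturates
```

A linear ramp is the simplest choice. A logarithmic mapping may better match perceived intensity, and the choice of channel to drive (vibration, sound, or a visual overlay) is a separate design decision.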